Building a Big, Diverse Data Platform for Wall Street

The entire capital markets industry is digging into the big data hype, or what I’m calling business intelligence optimization (BIO). I touched on this change in wording in an earlier piece: the focus is not on ‘big data’ itself but on the process of harvesting and exploiting new knowledge, regardless of where the data comes from or what type it is.

Given this definition, there is no panacea for raising the analytical quotient in capital markets. There is no big data analytics in a box. There are, however, components or architectural ingredients that are essential to getting value throughout the information supply chain, and to operationalizing those insights so they have the greatest impact on the bottom line. Some ways to engineer such a platform are more straightforward; others add complexity.

To build a successful BIO platform, start by understanding:

- Data science and quant talent that can make full use of these data and tools is scarce.

- Standards, data quality and governance practices are changing rapidly and are not yet fully formed, so deployment and management must proceed in measured steps.

- Some companies may not be culturally built for BIO. It requires a data-driven culture that is willing to experiment and understands that some activities generate immediate ROI while others take time. This is continuous improvement and failing fast, and some firms will not be able to reach fast insight.

- It’s not easy. Firms have difficulty mapping and stepping through the fundamental factors for any project – people, process and a clear vision and path for a “future state” or “next generation” platform – and BIO is more complex than most projects.

- Let’s not mince words: it’s hard. This will take dedication. The “edge” firms can gain takes hard work, time and patience. Gartner suggests that only 20% of Global 1000 organizations will have a strategic focus on “information infrastructures” by 2015.

Is there a path of least resistance? At this time and for the near-term future, a unified data architecture (UDA) is one of the best ways to build a platform that not only enables and operationalizes analytics, big data, BIO or information (whatever term is used) but also leverages existing IT assets. The goal is to build on current systems, introduce new technology only when needed, and maintain flexibility so the company can rapidly develop applications and analytics that improve performance. This is a hybrid ecosystem.

The components of a UDA include open source Hadoop, a discovery platform and an integrated data warehouse. Hadoop is an excellent solution for data staging, preprocessing and simple analytics, among other applications. The discovery platform should let business users explore data rapidly using a variety of embedded analytic techniques. And to operationalize findings and insights, the warehouse brings factors, rules or other outputs from Hadoop and the discovery platform into an integrated production environment, for use across the organization or by a specific business project or unit.
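To make the three roles concrete, here is a toy Python sketch of the flow the paragraph describes. It is purely illustrative and makes several assumptions: plain lists and dicts stand in for the Hadoop staging layer, the discovery platform and the integrated warehouse; the record fields (`symbol`, `ret`), the mean-return "factor" and the promotion threshold are all hypothetical. No real Hadoop or warehouse API is involved.

```python
from statistics import mean


def stage(raw_records):
    """Staging/preprocessing (the Hadoop role): drop malformed rows, normalize symbols."""
    staged = []
    for rec in raw_records:
        if rec.get("symbol") and isinstance(rec.get("ret"), (int, float)):
            staged.append({"symbol": rec["symbol"].strip().upper(), "ret": float(rec["ret"])})
    return staged


def discover(staged):
    """Discovery: compute a candidate factor (here, simply mean return) per symbol."""
    by_symbol = {}
    for rec in staged:
        by_symbol.setdefault(rec["symbol"], []).append(rec["ret"])
    return {sym: mean(rets) for sym, rets in by_symbol.items()}


def operationalize(candidates, warehouse, min_abs_value=0.01):
    """Warehouse: promote only findings that clear a hypothetical materiality threshold."""
    for sym, value in candidates.items():
        if abs(value) >= min_abs_value:
            warehouse[sym] = value
    return warehouse


raw = [
    {"symbol": " ibm ", "ret": 0.02},
    {"symbol": "IBM", "ret": 0.04},
    {"symbol": "XYZ", "ret": 0.001},   # too small to promote
    {"symbol": None, "ret": 0.5},      # malformed: no symbol
    {"symbol": "ABC", "ret": "n/a"},   # malformed: non-numeric return
]
warehouse = operationalize(discover(stage(raw)), {})
print(warehouse)
```

The point of the separation is the one the paragraph makes. Cheap cleanup happens upstream, exploration happens in the middle, and only vetted outputs reach the shared production store.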

The current combination of components is not just adding value but also enabling speed to market. A UDA is a sound approach that injects new, complementary components without competing with the fundamental architecture. Rather, it enables firms to fill voids in their systems and build the capabilities to develop analytics and applications rapidly. Most importantly, it delivers business intelligence that is not just interesting but actionable.

All the speed, analytics, insights and connectivity are nice, yet without an actionable outcome that improves the business, they add up to a piecemeal approach and an expensive big data science experiment.

Capital Markets’ Current Approach to Managing Intelligence Optimization, Not Big, Diverse Data

The dialogue on big, diverse data in capital markets has been changing during the last few months, and for the better. First, the name is changing, not just because of overuse and hype, but because firms are experimenting and definitions and applications are shifting away from the old definition, if there ever really was one. The V’s (velocity, variety, volume) are characteristics of data identified years ago, and they fail to capture what many firms are currently experiencing. At the moment, firms are finding that bringing all types of data onto an advanced analytics platform and applying the findings can drive value and deliver on the promise people have been clamoring about. It is more a process of harvesting and exploiting untapped knowledge than one rigid definition; you might even call it something like “business insights or intelligence optimization – BIO.” The process builds a greater level of information for fact-based decision making and organizes the firm’s capabilities and people around a platform and tools that add value for the business and its customers.

Capital markets firms that are leading adoption of a big, diverse data strategy realize that having a stated plan and governance in place is a best practice and a strong place to start. These companies begin with the areas of greatest pain or opportunity. For capital markets, trading and investment strategies are always at the top of the list. Risk management and customer insights are also critical areas of exploration, where applying resources can help improve trading and investment strategies. Remember, correlation is not causation, so traditional variables used in the discovery process may or may not explain or highlight new insights for trading and investment when combined with new information sets. Investment managers and trading firms are therefore developing an appreciation for how the new process reveals alpha or highlights bias in models and algorithms. Quickly spotting these small insights, moving on and iterating is another part of the workflow that leading firms employ. Staying focused, rather than “boiling the ocean,” is a critical element of the deployment.

An important part of this process is people: the talent pipeline and the skills shortage. The competition for experienced “BIO” talent is fierce. Capital markets firms are evaluating how to staff or build teams to manage their big analytics projects. They are working with colleges to promote data science, analytics and math to build the incoming pipeline. They are also sponsoring workshops and activities with schools to present their firms as interesting alternatives to the startups and large tech companies that often attract young talent. Once you get the word out and let graduates know that you have big problems to solve, you create a compelling value proposition that keeps your firm from losing qualified candidates to competing industries.

Another approach is to scan internally for people who already exhibit many of the attributes or skills associated with “data scientists.” The precise criteria for data scientists are still developing, but they are usually based on skills or traits such as statistics, data mining, being analytical, problem-solving (think detective-style deductive/inductive reasoning), inquisitiveness (hypothesis formation), collaboration and communication. It may seem like a tall order, but perhaps it’s a team rather than an individual.

With your people in place, you may need to centralize, perhaps even separate, a big, diverse data analytics group to foster ideas and shield it from a “poisonous corporate” culture that may kill off projects. This team may be organized with the sole objective of uncovering answers to some of the business’s tough questions and stumbling upon findings, no matter their size, that can move the needle on earnings growth or customer improvement.

The last point to raise is infrastructure. There are many ways to approach it, but in all of them the architecture should be chosen to fit the two elements above, rather than the other way around. The reality is that there is no single “box” or app that will manage your big, diverse data or BIO project completely. Nor is there a silver bullet that will attract and engage people across your firm, transform the organization into a data-driven culture or create an environment where questions can be generated. Rather, it’s the thoughtful combination of existing and new solutions that maps to where your firm is on the information maturity curve and where it wants to go.

Leading capital markets firms now making progress with “BIO” projects see data as a strategic asset and realize the benefits, regardless of how intangible they may initially appear, will ultimately deliver value. If data is the “life blood or DNA” of the organization as we’ve heard so often, then it would stand to reason the findings and patterns are unique as well. These capital markets firms understand the collaborative gain from democratizing data.

Democratizing data can come at a cost, and it can be confusing to know what the right architecture is as well as how to pay for it. Whether the conversation is about budgeting for new solutions or bending older ones to what’s needed, it takes investment. Chief data officers are making efforts to educate the executive suite by leveraging leading big, diverse data vendor partners to present the latest developments in the industry, by delivering tangible value every 3-6 months and by having projects fund other projects. The requirement is to keep the corporate mind and wallet open.

Can Group Finance and Risk Management Get Along?

There is a renewed move to integrate finance and risk data at sell-side firms for cash optimization and, equally important, transparency. So how can you get these two groups to cooperate to achieve a unified view?

For the past few years there has been renewed interest in integrating finance and risk data at capital markets firms, both for cash optimization and for transparency into how that cash is at risk.

This isn’t surprising, as these firms lost several of their profit lines due to changing regulations and economic impacts. Other drivers of the trend include risk reduction, management of scarce resources, margin leakage, and optimization of capital and liquidity.

Finance and risk professionals would agree that integrating data is valuable to both areas, yet they may not agree on how or where to tackle the task. For example, when investment banks try to reduce financial reconciliations, their finance and risk groups face a host of post-trade legacy systems with a number of trade feed source issues. Risk models output data of varying quality, and finance often has to normalize this information before using it in various reporting layers.
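A minimal sketch of the kind of finance-versus-risk trade feed reconciliation described above follows. It assumes that each group's feed can be reduced to trade ID → (notional, currency). The field layout, the sample trades and the 0.01 tolerance are hypothetical; real post-trade feeds carry many more attributes and matching rules.

```python
from decimal import Decimal


def reconcile(finance_feed, risk_feed, tolerance=Decimal("0.01")):
    """Compare two trade feeds keyed by trade ID and classify the breaks."""
    breaks = {"missing_in_risk": [], "missing_in_finance": [], "mismatched": []}
    for trade_id, (notional, ccy) in finance_feed.items():
        if trade_id not in risk_feed:
            breaks["missing_in_risk"].append(trade_id)
            continue
        risk_notional, risk_ccy = risk_feed[trade_id]
        # A currency disagreement or a notional gap beyond tolerance is a break.
        if ccy != risk_ccy or abs(notional - risk_notional) > tolerance:
            breaks["mismatched"].append(trade_id)
    breaks["missing_in_finance"] = [t for t in risk_feed if t not in finance_feed]
    return breaks


finance = {
    "T1": (Decimal("1000000.00"), "USD"),
    "T2": (Decimal("250000.00"), "EUR"),
    "T3": (Decimal("50000.00"), "USD"),
}
risk = {
    "T1": (Decimal("1000000.00"), "USD"),
    "T2": (Decimal("250000.50"), "EUR"),
    "T4": (Decimal("75000.00"), "GBP"),
}
print(reconcile(finance, risk))
```

`Decimal` is used instead of floats so that monetary comparisons are not distorted by binary rounding.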

Many finance and risk groups also have scale and time problems related to trade data, valuations, limits and disclosure issues. And, of course, there is a need to do all of this globally and around the clock. Just consolidating these feeds can be the first point of contention, before the groups even move on to the more complex aspects of integration, such as data standards and agreed definitions.

The multiple source systems can be managed a number of ways. For instance, should they be handled with logical data models, application consolidation or a data warehouse?

More importantly, the risk and finance groups need to find a middle ground on some of these aspects so they can gain traction in the process to unifying data.

Moving Mountains

As we know, there are formal, multi-year and very expensive approaches to data management that will result in a fully integrated company, but these are not where firms are getting the best return, output and use of time. Given the mountain to be moved in order to complete a successful finance and risk integration, companies can start small and build iteratively:

Data Governance: Where there isn’t a data governance charter, get the funding to establish one and do it. It doesn’t have to be perfect upfront; it will be a dynamic charter that changes over time. It should set some basic data strategies and principles for the risk and finance groups to chart against, as well as for other business units that can leverage this work.

Low-Hanging Fruit: Identify a short list of business activities between risk and finance where costs and inefficiency are high. For instance, trade feeds for financial P&L reconciliations is one common area.

Small Can Be Big: Establish the dollar value associated with these high-cost business activities. Understand that sometimes the problem is so large that an incremental win of just 2-3% can wring out millions in personnel and automation savings.

Identify the Problem: Organize working groups to identify the business data priorities.

Know Where You Are Going: Roadmap and align business priorities to your data requirements and define some approaches, timetables and responsibilities.
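The arithmetic behind the "Small Can Be Big" step is simple, but it is worth making explicit. The $150M annual cost base below is a hypothetical figure chosen only for illustration. It is not a number from any firm.

```python
def incremental_savings(annual_cost, improvement_pct):
    """Dollar savings from shaving improvement_pct percent off an annual cost base."""
    return annual_cost * improvement_pct / 100


# Hypothetical: $150M a year spent on reconciliation staff, tooling and rework.
cost_base = 150_000_000
for pct in (2, 3):
    print(f"{pct}% of ${cost_base:,} = ${incremental_savings(cost_base, pct):,.0f}")
```

Even at the low end of the range, a cost base of this size yields savings in the millions. That is why a modest, well-chosen starter project can justify its own funding.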

With these initial steps, you will be able to establish successful “sprints” or small starter projects that can move finance, risk and IT to identify and agree on one or more areas to consider.

The next steps, which are infrastructure and application changes, can be made to resolve the risk and finance integration challenges. These steps can help firms avoid stumbling and moving in circles. Capital markets firms are starting to mobilize on the small “sprint” approach. The outcome is an established data strategy foundation to reduce group infighting and quickly evaluate and execute projects that will enable the source systems needed for finance and risk reconciliations.

Companies shouldn’t expect group finance and risk folks to start spending weekends together, but they can get their data to mingle for the betterment of both groups.

An opportunity to avoid an ineffective data strategy in 2013

Data governance is a trend that has moved back to the forefront of the “how to manage information” discussion. A recent WS&T article highlights the use of deeper analytics for trading and points to data management as a central problem for capital markets firms. While deeper analytics are required to propel the business, one glaring issue typically found when analytical platforms are deployed is a lack of completeness, timeliness, accuracy and overall data quality at many top-tier capital markets firms. Yes, still. It seems shocking after so many years of hard work and dollars spent. Many firms still struggle to understand the impact on liquidity and on the G/L or balance sheet when performing various trade-related functions (e.g., pre-trade scenario analysis).
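The three quality dimensions named above can be measured mechanically once a governance group agrees on the rules. Below is a minimal sketch of such checks. It assumes that trade records are dicts with hypothetical fields (`trade_id`, `price`, `booked_at`) and that "accuracy" is approximated by a price plausibility range. A real governance program would define the actual rules and thresholds.

```python
from datetime import datetime, timedelta

REQUIRED = ("trade_id", "price", "booked_at")  # hypothetical mandatory fields


def quality_report(records, as_of, max_age=timedelta(hours=1), price_range=(0.0, 1e6)):
    """Share of records passing completeness, timeliness and (proxy) accuracy checks."""
    if not records:
        raise ValueError("no records to assess")
    n = len(records)
    # Completeness: every required field is present and non-null.
    complete = [r for r in records if all(r.get(f) is not None for f in REQUIRED)]
    # Timeliness: complete records booked within max_age of the as-of time.
    timely = [r for r in complete if as_of - r["booked_at"] <= max_age]
    # Accuracy proxy: complete records whose price falls in a plausible range.
    lo, hi = price_range
    accurate = [r for r in complete if lo < r["price"] <= hi]
    return {
        "completeness": len(complete) / n,
        "timeliness": len(timely) / n,
        "accuracy": len(accurate) / n,
    }


now = datetime(2013, 1, 2, 12, 0)
trades = [
    {"trade_id": "A", "price": 101.5, "booked_at": now - timedelta(minutes=5)},
    {"trade_id": "B", "price": None, "booked_at": now - timedelta(minutes=10)},
    {"trade_id": "C", "price": -4.0, "booked_at": now - timedelta(hours=3)},
    {"trade_id": "D", "price": 99.0, "booked_at": now - timedelta(minutes=30)},
]
print(quality_report(trades, as_of=now))
```

Tracking scores like these over time gives a governance charter something concrete to measure improvement against.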

Certainly, the notion of more data to improve analytics is compelling and there is tremendous value in mining big and small data. However, to make 2013 the year when firms improve their information strategies, formalized efforts should be made to improve data that capital markets firms already work with. The improvement comes from data quality enhancement that is defined and refined by a formalized data governance initiative.

Establishing a data governance charter, program and action group is essential to create organization, definition and agreement between business and IT and avoid mishaps that typically arise from past data quality projects. This step is critical to operationalize data successfully for analytics, and it must be done in a timely manner. Successes are required to reach the deeper analytics needed for understanding where trade and operational profit, cost and customer opportunities exist.

The impetus to formalize data governance programs is no longer just to meet regulations. The business is pressuring IT to produce outcomes from these multiyear data transformation ‘projects’ as well; it is tired of waiting for results. If providing reliable and actionable data to the business isn’t enough to persuade the firm to truly work through data governance, then perhaps market survival is. Some leading sell-side firms have decided to go all in and tie existing data efforts into a governance program so they can gain the competitive advantages clean data brings.

We’ve recently seen the U.S. Congress take a large and fundamentally vital component of the country’s future economic well-being and merely apply a short-term band-aid. In many ways, data is as important to capital markets firms as tax implications are to the country; let’s not allow firms to head over the data cliff.

Data Defense

This topic came to me as I sadly watched the Jets lose this past weekend, when some basic, fundamental defense could have won the game, namely protecting the ball! It got me thinking about how financial firms need to work on their risk “defense.”

Offense is typically the star of the game and draws the hearts, minds and affections of fans, yet many of us know defense wins games too and is equally, if not more important. Who doesn’t know Joe Montana, Michael Jordan, or Babe Ruth? But do you know who Jared Allen is? Or Johnny Bench?

Jared Allen is actually an active defensive end for the Vikings and is considered one of the most consistent and best in NFL history. Johnny Bench is considered one of the best defensive catchers in the history of baseball. And one play many of us remember is Derek Jeter’s miracle defensive flip in the pivotal Game 3 of the 2001 ALDS versus Oakland, when the Yankees faced elimination. Jeter’s play saved the game, and the Yankees went on to win the best-of-five series. I realize any New England fans will be quick to remind me of that season’s outcome.

In financial markets I see a lot of parallels, except in what is remembered from the outcome. When sales, profits, fund performance and other “offensive” successes are big, they make news, move share prices and have dramatic impacts for many companies. However, what news actually lasts in people’s minds? If I mention Citibank, what comes to mind given today’s news?

- Citi Analyst Mark Mahaney Terminated Following Facebook Disclosure

- How about JPMC – The London Whale!!

- Barclays – LIBORgate

For these banks and far too many others, risk and compliance is an extremely challenging endeavor. The examples here are very recent, and everyone can appreciate that risk and compliance require continuous improvement and that firms must stay continually on guard. To be fair, it’s not just banks. Quick, what is the last news headline you remember about TJ Maxx?

How can you play better defense? Foremost, the firm’s leadership, people and culture must be prepared to embed risk awareness into the organization from the top down. This is vital. It continually improves the firm’s “defensive” strength and drives the actions and steps necessary to minimize risk. Getting better at identifying risks, largely the risks that can be managed, is also a vital step. Data breaches, fraud and portfolio exposure are some of the largest risks that firms can and must work on.

This is all easier said than done, as changing cultures and the complexity of data and information in firms make the task extremely difficult. And rather than choosing between changing the culture and improving risk information, your firm must plan to do both. In my sports example, Jeter made an incredible toss to home plate, but none of it matters without Jorge Posada catching the ball.

For risk information, there is some blocking and tackling that needs to happen:

- Improve integration of data across the enterprise and gain the ability to peer down to desk-level activity. Ask: how is the firm unifying its data?

- Speed is also important, as data breaches, fraud and market risk never have an off-season. So what are the bottlenecks in your network? Can data load quickly enough?

- Continuous improvement for data comes from ensuring there is a mechanism for dynamic learning from observed outcomes. So explore how to improve rules engines, making automated decisions better and more reflective of changing dynamics inside and outside the company.

- Examine and test newer data software such as Hadoop to capture large and varied types of data, improving on what is too often missed in risk analysis.

- What data visualization applications are coming out that help you see what’s happening?

- Have strong data models in place that ensure data standards to help reconcile the vast silos of risk and information across the company.

- Absolutely ensure data governance is established, starting or planned; without it, none of the above will work well. Changing cultures and behavior are enormous tasks, so people will need to know that data is considered an asset and that the information they receive can be trusted.
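The point about rules engines learning from observed outcomes can be illustrated with a toy rule whose alert threshold is re-tuned from investigated cases. This is a hypothetical sketch of one simple scheme: the threshold moves a fixed fraction toward any misclassified amount. It does not describe how any particular rules engine works, and the class name and parameters are illustrative.

```python
class AdaptiveThresholdRule:
    """Flag amounts above a threshold and re-tune the threshold from investigated outcomes."""

    def __init__(self, threshold, learning_rate=0.1):
        self.threshold = float(threshold)
        self.learning_rate = learning_rate  # fraction of the gap closed per mistake

    def flag(self, amount):
        return amount > self.threshold

    def learn(self, amount, was_fraud):
        """Move the threshold toward any misclassified amount; correct calls leave it alone."""
        flagged = self.flag(amount)
        false_positive = flagged and not was_fraud   # amount > threshold, so it rises
        missed_fraud = was_fraud and not flagged     # amount <= threshold, so it falls
        if false_positive or missed_fraud:
            self.threshold += self.learning_rate * (amount - self.threshold)


rule = AdaptiveThresholdRule(threshold=10_000, learning_rate=0.5)
rule.learn(12_000, was_fraud=False)  # false positive: threshold rises to 11,000
rule.learn(8_000, was_fraud=True)    # missed fraud: threshold falls to 9,500
print(rule.threshold)  # 9500.0
```

A production engine would weigh many features and guard against drift. The sketch only shows the feedback loop the bullet describes, in which outcomes flow back into the decision rule.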

It is fundamental to be able to see what your firm is doing with greater confidence. Improving risk management and transparency is not just a lofty notion. It is also a requirement, as regulations have forced the hands of financial markets firms. The costs of getting this wrong are high too, as seen below.

Until that ill-fated October 17th evening in 2004 a legion of fans and multiple generations could also tell you the pains of bad defense in Boston.

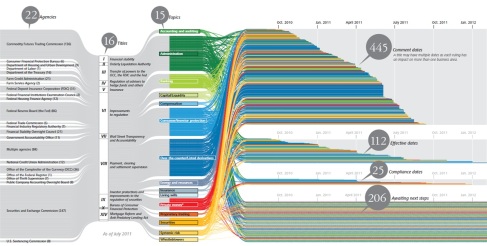

IT Architectural Preparedness of Financial Reform Agencies

It’s no secret that many of the financial services regulatory agencies are underfunded and understaffed relative to the number of mandates they are managing (see graphic below). Wall Street tends to pay a bit better too, so getting the best and brightest can be a challenge in the first place.

Commodity Futures Trading Commission Chairman Gary Gensler himself told lawmakers, “We’re way underfunded at the CFTC.” Securities and Exchange Commission Chairman Mary Schapiro agreed, telling Congress, “We’re still way outgunned by the firms we regulate in terms of technology.”

Fundamentally, it seems preposterous to assume that the SEC’s roughly 3,600 employees (only a fraction of whom actually monitor Wall Street, mind you) could keep pace in real time and see the hits before they come. The reality is more akin to internet hacking: the police on watch are really only reacting when security breaches happen. Getting to a predictive, or even real-time, level of reading the markets is just not happening at this juncture.

So how do you make the best use of the people you have? Firstly, many of the agencies aren’t looking to outsmart the firms that create financial instruments and deals. Rather, their aim is to hire folks that can look in the rear view and piece together patterns. The second element is giving them the tools to do just that.

The data challenges are such that in a crisis you need cross agency information to be aggregated, standardized and pulled quickly. Also, how do you classify data quickly to map institutional and security concentrations and connections? Data is limited in scope and depth and not easily aggregated across the market. So here are just a couple of the strategies and efforts that the SEC is working on and can consider:

- The Office of Financial Research was created to act as the one data store to serve various agencies

- The Consolidated Audit Trail, to be funded by market participants, run by FINRA and analyzed by the SEC

- Pushing for industry standards: Legal Entity Identifiers (LEI), Unique Swap Identifier (USI) and Unique Product Identifiers (UPI)

- Common market ontology: Enterprise Data Management Council (EDM) efforts to standardize financial metadata – Financial Industry Business Ontology (Language of the Contract)
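Identifier standards like the LEI include built-in protection against keying errors. Under ISO 17442, an LEI is 20 alphanumeric characters, and the last two are check digits computed with the ISO/IEC 7064 MOD 97-10 scheme (the same family used for IBANs). The sketch below implements that check. The helper names are hypothetical, and the 18-character prefix in the test is made up rather than a registered entity.

```python
def _to_digits(s):
    """Map 0-9 to themselves and A-Z to 10-35, as ISO 7064 MOD 97-10 requires."""
    return "".join(str(int(ch, 36)) for ch in s.upper())


def lei_check_digits(prefix18):
    """Compute the two check digits for an 18-character LEI prefix."""
    if len(prefix18) != 18 or not (prefix18.isascii() and prefix18.isalnum()):
        raise ValueError("LEI prefix must be 18 ASCII alphanumeric characters")
    remainder = int(_to_digits(prefix18 + "00")) % 97
    return f"{98 - remainder:02d}"


def is_valid_lei(lei):
    """True if lei is 20 characters and passes the MOD 97-10 check (remainder 1)."""
    if len(lei) != 20 or not (lei.isascii() and lei.isalnum()) or not lei[18:].isdigit():
        return False
    return int(_to_digits(lei)) % 97 == 1
```

Appending the computed check digits to any valid prefix yields a string that `is_valid_lei` accepts. Mistyping a single character of that string makes it fail, which is exactly the kind of cheap, standard-driven data quality control that common identifiers make possible across agencies.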

Many RFPs and infrastructure projects are being planned right now for the coming 2-3 years. But with the blueprinting and payment for these systems coming from the Street, will they adequately meet the agencies’ missions and long-term goals? A recent Wall Street & Technology article goes into some detail on the SEC’s efforts to change its IT infrastructure.

While these changes are underway, the lack of funding and staff will not go unnoticed. Even as the WS&T article highlights the SEC’s tech advances, the JPMorgan Chase ‘London Whale’ trading event still took place.

It’s understandable that these agencies will never reach a ‘perfect state,’ if there even is one. However, not taking the time to adequately develop the swap data repositories, audit trails and reporting hubs could lead not only to less insight and oversight but also to mismatched systems that fail to achieve the agencies’ missions. Inadequate data stores and unconsolidated data infrastructures may only result in stop-gap mandates and newer regulation in subsequent years. Like the too-often knee-jerk, hysterical regulations that follow a crisis, these fixes would fail on many levels too. If you’re going to be watching, at least buy some decent binoculars.

Five likely outcomes from the BATS IPO withdrawal

The past few days have been great for Goldman Sachs. Why? The Better Alternative Trading System (BATS) has knocked Goldman out of the headlines. In fact, I wonder if Lloyd Blankfein is upset he even wasted some young PR person’s time to write a response letter to the now infamous NY Times OpEd piece in the first place.

For BATS, the past few days have been an absolute nightmare. Friday morning (3/23), the WSJ ran a front-page story on the SEC’s high frequency trading probe that mentions BATS a few times as part of the examination. Then later that day, well, BATS flew, into a wall that is. The exchange made its initial public offering (IPO) that fateful Friday morning, only to withdraw it after “technical issues” caused symptoms resembling the May 6, 2010 flash crash. BATS has been firing on all cylinders for a while, and to be honest it’s a bit of a shock to see this.

1. The highlight this time is that trade monitoring and surveillance measures are still lacking. Further, circuit breakers are still inadequate to backstop human trade errors. This is not the nail in the coffin for HFT, but it is yet another point that the media will push to spread the firestorm and rage against this segment of trading. I sense this will push the discussion toward adding more breakers, when perhaps a new approach to combating trade entry errors needs to be considered.

2. One suggestion that has surfaced is putting the brakes on trade execution speeds. I don’t see the speed of trading slowing down. OK, maybe it could be curbed slightly, but I find it extremely difficult to see anyone clamping the pace at which the market operates. The sheer number of firms and people whose businesses rely on low latency trading is large. Think beyond the small group of HFT firms, or even BATS, NYSE and NASDAQ. There is also a host of technology firms, telcos and banks that are part of the low latency ecosystem that involves HFT, for better or worse.

I also think it may be difficult to ignore the trade cost savings and efficiencies gained through evolving market structure and the low latency race, all things that continue to be fought for on behalf of investors. Reg NMS cleared the way for electronic trading to prosper and is why a firm like BATS even exists. Further, the late-nineties order handling rules and decimalization in 2001 led to a big drop in transaction costs. Slowing trading would seem counterproductive to what the markets and regulators have been working toward over the years.

3. Market structure has evolved due to high speed trading, and it continues to. Rather than dialing back to past approaches, there may be forgotten tactics or unexplored new ones that can propel the markets forward. I can appreciate both the view that HFT is predatory and the view that this group is a source of liquidity to the market (new-age market makers?). The reality is that, on a firm-by-firm basis, the truth likely lies somewhere in the middle of these arguments. I certainly hope the SEC does not uncover a wide-ranging scandal; my portfolio can’t take many more hits!!

4. What’s guaranteed is that as the latest probe finalizes its examination, there will be proposed regulation, most likely in the form of increased reporting and/or registration requirements. There’s already discussion coming from Washington on this. In my opinion, this is by no means anything we will see in 2012. I would expect that after comment periods drag out between the Street and regulators (much like Dodd-Frank), a compromise will be reached that is not as stinging as the original proposals. The regulators will likely find they are as confused about what high frequency trading is as they are about credit default swaps, and they will inevitably pass something more biased toward the Street.

5. The end result is hopefully something that will reassure the public that the markets are safe. Perhaps it will also give the buy-side some confidence that their trades aren’t constantly being stepped ahead of, making them more willing to push investor dollars back into the market. Information leakage is a huge concern, as it has always been, and it is an area that can improve.
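Point 1 argues for approaches to trade entry errors that go beyond market-wide circuit breakers. One commonly discussed complement is a per-order pre-trade check that rejects orders priced or sized far outside recent norms. The sketch below is a hypothetical illustration of that idea. The `Order` fields, the 10% price collar and the 100,000-share size cap are arbitrary assumptions, not any exchange's actual rules.

```python
from dataclasses import dataclass


@dataclass
class Order:
    symbol: str
    price: float
    quantity: int


def pre_trade_check(order, reference_price, collar_pct=10.0, max_quantity=100_000):
    """Return a list of reasons to reject the order; an empty list means it passes."""
    if reference_price <= 0:
        raise ValueError("reference price must be positive")
    reasons = []
    # Size check: catches extra zeros typed into the quantity field.
    if order.quantity <= 0 or order.quantity > max_quantity:
        reasons.append("quantity outside allowed range")
    # Price collar: catches orders far away from the recent reference price.
    deviation_pct = abs(order.price - reference_price) / reference_price * 100
    if deviation_pct > collar_pct:
        reasons.append(f"price {deviation_pct:.1f}% away from reference")
    return reasons


print(pre_trade_check(Order("XYZ", 16.00, 500), reference_price=16.00))
print(pre_trade_check(Order("XYZ", 1.60, 500), reference_price=16.00))
```

Unlike a circuit breaker, which halts the whole market after prices have already moved, a check like this stops the individual erroneous order before it reaches the book.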

As for BATS, I would suggest shelving the IPO for a while, perhaps until the next major mishap shows up at another financial services firm, just as Goldman has benefited from BATS’s timing. So I guess that means BATS will have clearance for its listing in something like a few weeks?! #toughbeingawallstreetplaya

Are new personal investment solutions helping consumers?

Following my recent trip to the FinovateFall 2011 conference in New York City, I wanted to share some of the new approaches in personal investment solutions, which I define as a broad category encompassing financial tracking, planning, education, simulated trading, and portfolio screener solutions. These solutions are all designed to aid individual consumers with their financial planning and investing needs. Wall Street Survivor CEO, Rory Olson, summed up the issue nicely, “Consumers are at a critical disadvantage when trying to understand the black box that is the market. This knowledge is needed though to manage a critical aspect of their lives. Many lack even the most basic know-how on market fundamentals at a time when they are required to.”

A table of some of the newer solutions I see in the market that fall into our categorization can be seen below.

While there are many tools currently available, the large majority are too disjointed for users to aggregate smartly, or they fail to offer ‘tips’ or thinking points that help users make value-added personal decisions. Focused niche sites, on the other hand, may leave out the functionality to act on the advice they provide by initiating trades or other financial transactions. Thus, there continue to be gaps in the market that entrepreneurs are attempting to exploit. These startups are pushing new offerings that put different spins on old techniques, utilize new data sources and create new business models. This is a theme we’ve explored in previous write-ups as well: “Retail trading chimps – are the Gorillas being disturbed?”

Here are some of the drivers that I see ushering in new entrants:

- Social media and other Internet collaboration/community channel business models that are enhanced by mining customer data.

- Unstructured data mining tools are being packaged and pushed downmarket, which will make them more easily accessible to the entrepreneur.

- Ubiquity of mobile devices and tablets.

- Increased fees and commissions charged by financial firms.

It may be too early to say whether these new tools are solving consumers’ larger financial concerns, but even if they are only slightly improving the experience and the process, we see that as a marginal improvement and, in many cases, quite a valuable one. Additionally, these new solutions are outdoing bank offerings when it comes to cleaner UIs, speed, searchability and ease of customization on the portal or mobile device – all critical aspects that appeal to today’s retail investor.

Banks working through their retail investor strategies should remember that while new sites can offer new ideas and approaches, many do not survive. Examples include the shuttered personal finance Web 2.0 sites Wesabe, Rudder and Cake Financial. Heavy bank/broker regulation and increased liability are also large factors that slow larger financial firms down in offering these solutions. In the end, these new online solutions can still provide insights into what customers desire and a testing ground for improving your firm’s own solutions.

Merge or die: Exchanges move up the consolidation curve

The NYSE and Deutsche Börse (DB) merger (yes, I realize calling it a merger will infuriate some) is a pure scale play and makes sound operating sense. The proposed revenue and cost synergies are in line with past exchange transactions. The move also propels the combined entity forward to meet ever-changing global trading trends while putting serious pressure on smaller competitors (ATSs and MTFs) that have been quick to grab market share. NYSE and DB are moving quickly up the consolidation curve, and this deal positions them well to capture back some of that lost market share. Both firms have past deal experience, a growing global footprint, a culture that is accepting of M&A activity and an increasing focus on profitability.

Here are the deal stats (as outlined by Deal Journal):

- Revenues: $5.4 billion combined revenue, based on 2010 figures

- 37% of total revenue from derivatives trading and clearing

- 29% of revenue from cash listings, trading and clearing

- 20% in settlement & custody

- 14% in market data, index & technology services

Synergies: The companies said they expect cost synergies of $400 million by the third year after the merger is completed. They also expect the combined company to wring out at least $133 million in annual revenue synergies.
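For readers who want the dollar view, the percentage split above can be turned into approximate segment revenues with a few lines of Python. This is a back-of-the-envelope sketch, assuming the $5.4 billion 2010 combined figure and the Deal Journal percentages quoted above; the names (`revenue_by_segment`, `total_synergies_usd_m`) are my own illustrative choices, not anything published by the companies. Adding the two synergy targets also assumes the $400 million cost run-rate (year three) and the $133 million revenue figure are both fully achieved at the same time.

```python
# Figures as quoted in this post (Deal Journal, based on 2010 results).
COMBINED_REVENUE_USD_BN = 5.4
REVENUE_MIX_PCT = {
    "Derivatives trading and clearing": 37,
    "Cash listings, trading and clearing": 29,
    "Settlement and custody": 20,
    "Market data, index and technology services": 14,
}
COST_SYNERGIES_USD_M = 400     # targeted by the third year after completion
REVENUE_SYNERGIES_USD_M = 133  # "at least" this much per year


def revenue_by_segment(total_bn, mix_pct):
    """Convert percentage shares into approximate dollar amounts in $bn.

    Hypothetical helper for illustration; values are rounded to two
    decimals, so the segments may not sum exactly to the total.
    """
    if sum(mix_pct.values()) != 100:
        raise ValueError("segment shares should sum to 100%")
    return {name: round(total_bn * pct / 100, 2) for name, pct in mix_pct.items()}


def total_synergies_usd_m(cost_m, revenue_m):
    """Add cost and revenue synergies, assuming both are fully realized."""
    return cost_m + revenue_m


if __name__ == "__main__":
    for name, amount in revenue_by_segment(COMBINED_REVENUE_USD_BN, REVENUE_MIX_PCT).items():
        print(f"{name}: ~${amount:.2f} bn")
    combined = total_synergies_usd_m(COST_SYNERGIES_USD_M, REVENUE_SYNERGIES_USD_M)
    print(f"Combined synergy target: ~${combined} million per year")
```

Roughly speaking, derivatives come out near $2.0 billion of the combined revenue, which is why the derivatives and clearing franchise is often seen as the heart of this deal.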


It’s hard to argue with the revenue and cost-saving projections; on paper it all looks great. Scale and a stronger IT infrastructure will be vital for this deal to succeed over time. Both firms have been making substantial investments to compete strongly in electronic trading markets. Their brands should also help push their new ventures while they maintain their core businesses.

On the competitive front, firms like BATS have grown quickly; BATS is now the third-largest exchange in the US with roughly 10% of trades. BATS has made acquisition overtures to Chi-X, but without success thus far. The brisk deal activity over the past week should motivate the two to combine soon in order to survive; otherwise, they will ultimately be acquired and folded into larger firms. A combined BATS and Chi-X Europe could maintain a strong, market-leading position, but their long-term prospects of going it alone are grim.

We could debate how the merger deepens their large-cap or institutional bias. What’s important is that NYSE and DB are showing how their M&A experience is helping them stay in the game, and how they are working to change and expand their businesses to find new profit pools away from their traditional core businesses. I’ve written in the past about how large firms can fall prey to smaller ones very quickly, but NYSE and DB appear to be making the changes necessary to thwart those attacks and stay extremely competitive.

Will strained regulators cut back on Wall Street mandates?

The SEC, CFTC and other agencies are grossly understaffed and overworked, so will planned Wall Street reforms need to be cut? This might seem a ridiculous notion, but Capitol Hill has called for a host of new mandates in short order. Dodd-Frank alone has 240 rulemaking provisions. In 2011, the SEC must write rules for OTC derivatives, private fund advisers, credit rating agencies, the asset-backed securitization market, corporate governance, executive compensation and whistleblower provisions. That is no small task, and the system may be showing some cracks.

The Wall Street Journal (WSJ) ran a story yesterday about the budgetary pinch impacting the SEC. It described how cuts are leading to fewer investigations and an inability to retain talent and keep pace with new legislation. This story comes one week after Gary Gensler, head of the Commodity Futures Trading Commission, remarked that “No doubt, as we’re human, that some rules may slip” in regard to a long-awaited swap trading mandate plan. Further, Mary Schapiro, SEC chairman, stated in her September 30th testimony to Congress that “we currently estimate that the SEC will need to add approximately 800 new positions over time in order to carry out the new or expanded responsibilities given to the agency by the legislation.” According to the WSJ article, the SEC budget will only allow for 131 new staff next year. Given the laundry list noted above, things are going to get even more hectic at the SEC this coming year.

We’ve previously talked about the imbalance between planned mandates and regulatory agencies’ ability to police and enforce them. Does this mean mandates will be pulled? Unlikely; however, the outlined timelines will certainly be extended. This may buy time for firms, and for the IT vendors that offer solutions, to incorporate compliance measures. While I don’t see Congress bending on its master reform plans, when agencies are pressed, it’s not hard to see how actual implementation could fall short of the mark. That may call into question the impact of the reform, but the important takeaway for capital markets firms is that the sting may be far less than what everyone continues to talk about.

The SEC and other agencies ultimately translate legislation into actual rules and communicate them to the Street. Comment periods allow for industry feedback and a chance to give input into the final shape of these mandates. So when an agency is shorthanded and playing catch-up, it may lean on the industry a bit more to help craft the actual rules. Further, some of these mandates could potentially become self-policing activities, like those seen at watchdogs such as FINRA.

You could say Wall Street would gladly pass on any new legislation. The next best thing might be shaping its design. With the stress and strain regulators are facing, that is the likely reality.
